The world of machine learning-based weather prediction (MLWP) is moving fast. Many developments, each of which builds on top of learnings from previous works, are published in rapid succession. In previous articles, we focused on the internals of MLWP models and on the first generation of these models. We’ve learned that there are currently three main routes for developing AI models – Vision Transformers, neural operators, and graph networks – and that Pangu-Weather, the FourCastNet series and GraphCast are using them quite successfully. But developments don’t end there.

Today, we continue our series on AI and weather forecasting by looking at the second generation of AI-based weather models. Specifically, we will introduce FengWu, FuXi and ECMWF’s own AIFS model. Compared to the first generation of AI-based weather models, they bring multimodality, cascade ML and attention as the primary novelties:

• FengWu treats each atmospheric variable as a separate modality. For each modality, it introduces a dedicated encoder-decoder structure, combined with a multi-task learning strategy. Additionally, it improves iterative forecasting via what is known as a ‘replay buffer’.

• FuXi uses a cascade approach, with separate sub-models for the short, medium, and longer term.

• ECMWF’s AIFS, specifically its second generation, uses attention-based graph neural networks for faster learning.

In this article, we zoom in on these developments in more detail. The explanations remain as intuitive as possible, to allow for building understanding instead of getting lost in details.

FengWu: a multimodal weather Transformer

Let’s begin with the FengWu model, developed by Chen et al. (2023), who are primarily affiliated with the Shanghai Artificial Intelligence Laboratory. The FengWu development team recognizes that AI-based weather forecasting has made large strides in performance, but that forecasts are still limited compared to NWP models. Specifically, they make three arguments:

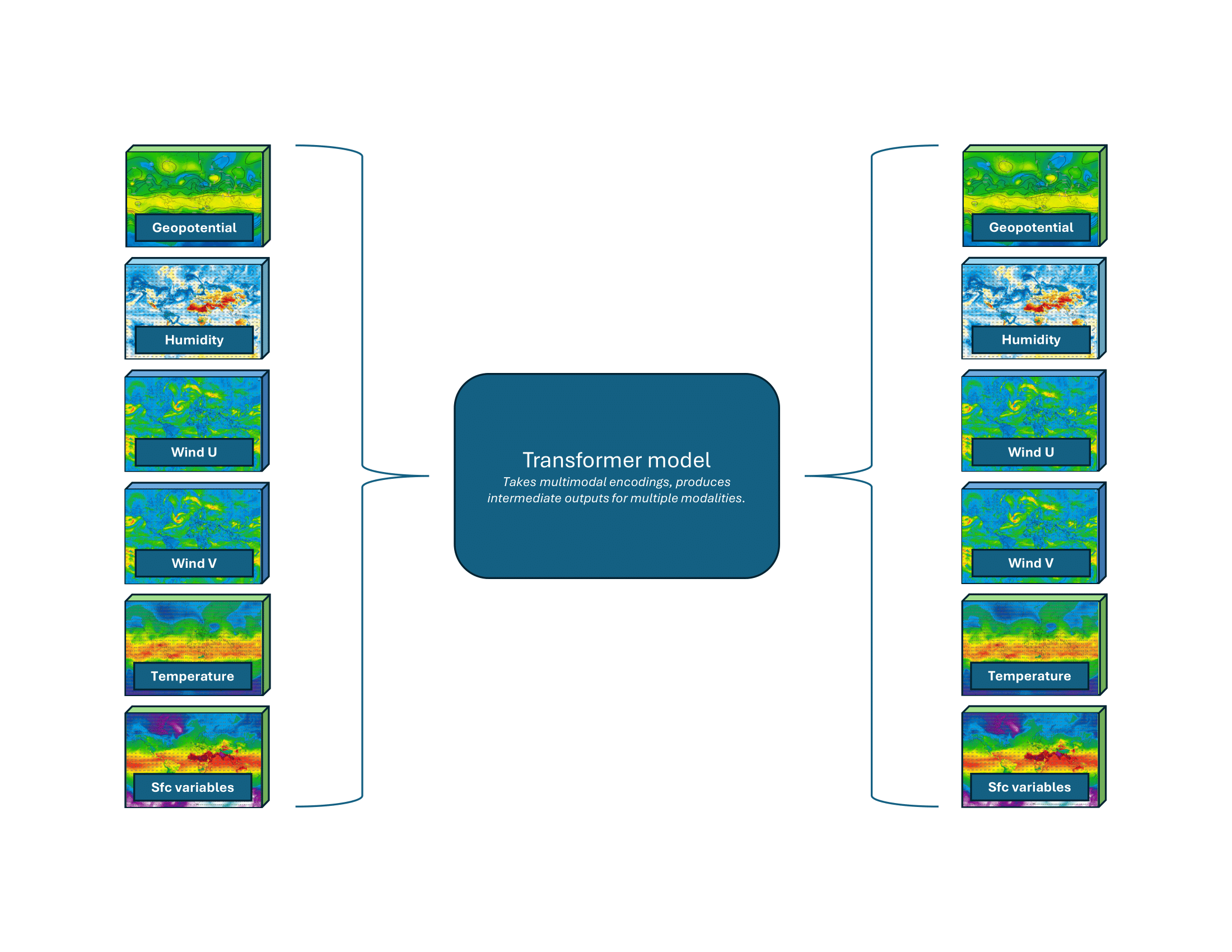

1. It could be better to treat the various atmospheric variables used by an AI weather model as different modalities. In other words, instead of stacking all variables on top of each other before feeding them through the model, each variable is first processed independently using a dedicated encoder, after which the processed variables (the encodings) are fused and fed through the model. Subsequently, using dedicated decoders, the output is converted back into a weather forecast for the next time step. The rationale for this could be that each encoder, when dedicated, learns to detect unique patterns present within its specific variable before the model considers all variables together.

2. Loss functions used when training the model (in AI weather prediction typically RMSE) don’t take into account that, according to the authors, predicting each variable is a separate task. Instead, the errors for all variables are combined into a single loss value. To solve this, the authors expand on the GraphCast work, which assigns manually chosen weights indicating the importance of each task/variable, by letting FengWu learn these weights during training, so that the model itself learns how to balance the variables.

3. The first generation of AI weather models typically produces forecasts autoregressively: the forecast for +3 hours is used as input for generating the forecast for +6 hours, and so forth. This typically leads to significant error accumulation and blurry forecasts in the longer term. GraphCast already attempts to solve this by partially incorporating autoregression in the training phase, but this is not sufficient to resolve the problem entirely. FengWu introduces the concept of a replay buffer, which stores intermediate predictions temporarily for use during training. Thus, contrary to GraphCast’s setup, which extends training to autoregressive prediction for 12 time steps at maximum, FengWu is able to train for longer lead times too.
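
To make the replay buffer idea from point 3 more tangible, here is a minimal sketch in plain Python. It is not FengWu’s implementation: `toy_model`, `ReplayBuffer`, `training_inputs` and the parameters `capacity`, `p_replay` and `max_lead` are all hypothetical names chosen for illustration, and the actual loss computation and weight update are left out. The sketch only shows the core mechanism: predictions are stored and later reused as training inputs, so the model also sees its own (imperfect) outputs at longer lead times.

```python
import random
from collections import deque


def toy_model(state):
    """Hypothetical stand-in for one forecast step (here: simple damping)."""
    return [0.9 * x for x in state]


class ReplayBuffer:
    """Fixed-size store of (state, lead_step) pairs produced by the model itself."""

    def __init__(self, capacity, seed=0):
        self.items = deque(maxlen=capacity)  # oldest entries are dropped first
        self.rng = random.Random(seed)

    def add(self, state, lead_step):
        self.items.append((state, lead_step))

    def sample(self):
        return self.rng.choice(list(self.items))


def training_inputs(analyses, buffer, n_iterations, max_lead, p_replay=0.5):
    """Yield (input_state, lead_step) pairs, mixing analyses with replayed predictions."""
    rng = random.Random(1)
    for i in range(n_iterations):
        if buffer.items and rng.random() < p_replay:
            state, lead = buffer.sample()  # start from an earlier prediction
        else:
            state, lead = analyses[i % len(analyses)], 0  # start from an analysis
        prediction = toy_model(state)
        # A real training loop would compare `prediction` with the analysis
        # valid at lead + 1 and update the model weights here.
        if lead + 1 < max_lead:
            buffer.add(prediction, lead + 1)  # keep it for reuse as a later input
        yield state, lead
```

Because each training iteration still performs only a single forward step, the cost per iteration stays the same while the inputs gradually cover longer lead times.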

The FengWu AI-based weather model uses modality-specific encoders and modality-specific decoders in combination with a Transformer model. Inspired by Chen et al. (2023).

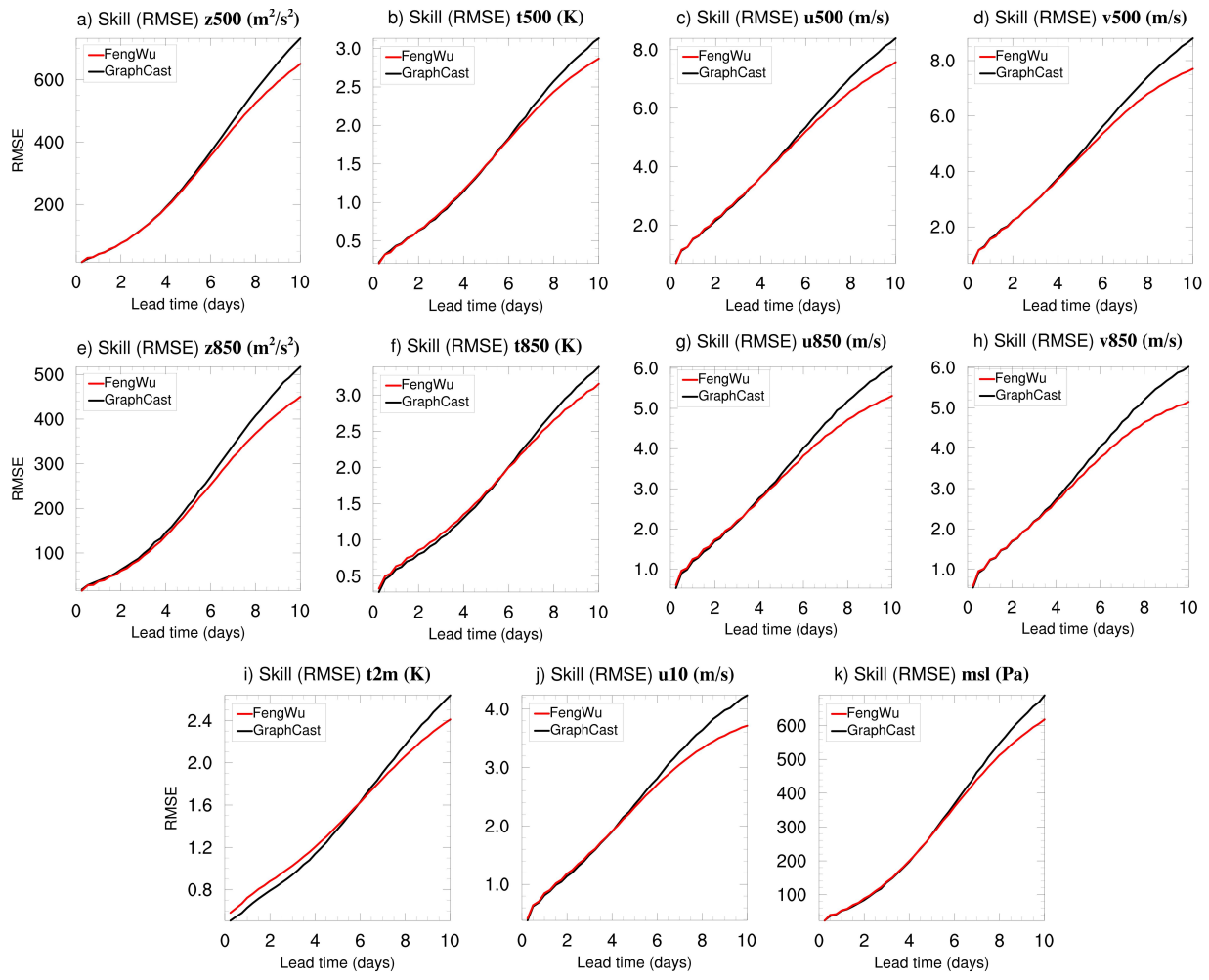
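
The learned per-variable weighting from point 2 in the list above can also be sketched in a few lines. One common way to learn such weights is to give every variable a learnable log-variance `s` and minimize `exp(-s) * error + s`; whether this matches FengWu’s exact formulation is an assumption on our side. The variable names and error values below are made up for illustration, and the errors are kept fixed, whereas in real training they change with every update.

```python
import math


def weighted_loss(mse_per_variable, log_variances):
    """Sum of per-variable errors, each scaled by a learned weight exp(-s)."""
    return sum(math.exp(-s) * mse + s
               for mse, s in zip(mse_per_variable, log_variances))


def update_log_variances(mse_per_variable, log_variances, lr=0.1):
    """One gradient-descent step on the learnable log-variances s."""
    # d/ds [exp(-s) * mse + s] = 1 - exp(-s) * mse
    return [s - lr * (1.0 - math.exp(-s) * mse)
            for mse, s in zip(mse_per_variable, log_variances)]


# Hypothetical per-variable errors, for illustration only.
mse = {"z500": 4.0, "t2m": 1.0, "u10": 0.25}
s = [0.0] * len(mse)
for _ in range(2000):
    s = update_log_variances(list(mse.values()), s)

# The learned weight of each variable ends up inversely proportional to its error.
weights = {name: math.exp(-si) for name, si in zip(mse, s)}
```

In this toy setting, variables with a large error receive a small weight and vice versa, so no single variable dominates the total loss and nobody has to pick the weights by hand.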

Unfortunately, FengWu’s performance is not benchmarked in the WeatherBench framework (which will be discussed in detail in the next article of this series). Still, in the authors’ work, a comparison between FengWu and GraphCast is reported. Looking at the RMSE scores illustrating average model performance, it can be observed that FengWu indeed improves longer term performance, typically not at the cost of shorter-term performance (except for the two-meter temperature; Chen et al., 2023).

RMSE values comparing the FengWu model with GraphCast. Image from Chen et al. (2023).

Something that we will highlight in more detail in forthcoming articles is the amount of work already built on top of the base FengWu model. For example, Xiao et al. (2023) looked at combining FengWu with the 4D-variational data assimilation process (4DVar), allowing for observations to be incorporated into the model directly instead of relying on NWP analyses.

What’s more, Han et al. (2024) introduced FengWu-GHR, which intelligently combines multiple datasets and machine learning techniques to forecast weather at 0.09-degree horizontal resolution, which is approximately 9 kilometers. As mentioned, we will look at these developments in the next articles of this series.

FuXi: a cascade modelling approach

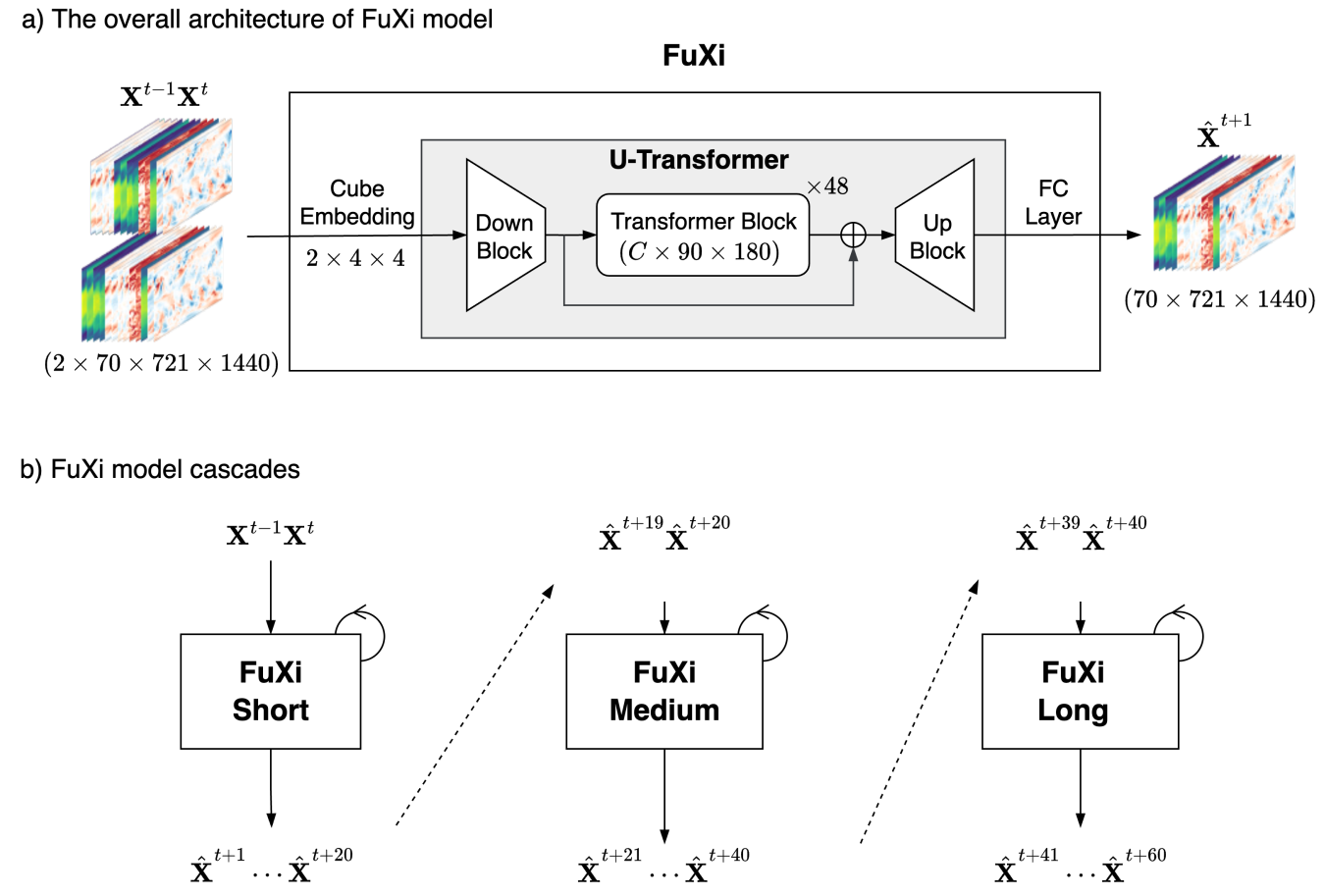

The creators of the FuXi model also recognized the problem related to longer-term forecast performance (Chen et al., 2023b). They argued, however, that improving longer-term forecast performance by means of multi-step autoregressive training (as done by e.g. GraphCast and FengWu) may come at the cost of shorter-term forecast performance.

Hence, instead of adapting the training process to consider autoregressively generated forecasts, they took a different approach by training three separate models and cascading them (i.e. each model continues the forecast from where the previous one stopped). Named FuXi Short, FuXi Medium and FuXi Long, the models start from a pretrained FuXi model that has learned to predict just one time step into the future. Subsequently, FuXi Short is trained by increasing the number of predicted time steps during the training process. This model is then used as the starting point for FuXi Medium (specifically focused on the medium term), which in turn is used as the starting point for FuXi Long (for the long term).

FuXi is a Transformer-based model, but forecast generation happens with three separate models trained on either the short term (timesteps 0 to 20), medium term (timesteps 21 to 40) or long term (timesteps 41 to 60). Image from Chen et al. (2023b).
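
At forecast time, the cascade boils down to picking the right sub-model for each time step. The sketch below illustrates this with plain Python. The step ranges follow the caption above (how the boundary steps are counted is our assumption), while `make_toy_model`, `select_model` and `cascade_forecast` are hypothetical names, and the ‘models’ are placeholders that simply add a constant.

```python
def make_toy_model(increment):
    """Hypothetical stand-in for a trained sub-model: adds a constant per step."""
    return lambda state: state + increment


# Step ranges follow the caption above; the model bodies are placeholders.
CASCADE = [
    (range(0, 21), "short", make_toy_model(1.0)),
    (range(21, 41), "medium", make_toy_model(10.0)),
    (range(41, 61), "long", make_toy_model(100.0)),
]


def select_model(step):
    """Return the name and model responsible for the given time step."""
    for steps, name, model in CASCADE:
        if step in steps:
            return name, model
    raise ValueError(f"step {step} is outside the cascade's range")


def cascade_forecast(initial_state, n_steps):
    """Roll out autoregressively, switching sub-models at the range boundaries."""
    state, used = initial_state, []
    for step in range(n_steps):
        name, model = select_model(step)
        state = model(state)  # the output of one step is the input to the next
        used.append(name)
    return state, used
```

Calling `cascade_forecast(0.0, 61)` runs through all three sub-models in order. The hand-over is nothing more than passing the last state of one model to the next one.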

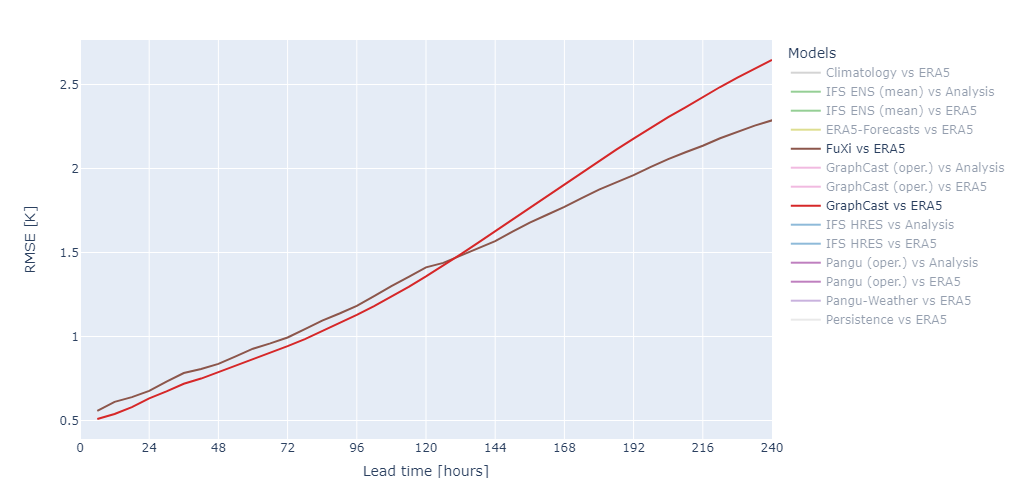

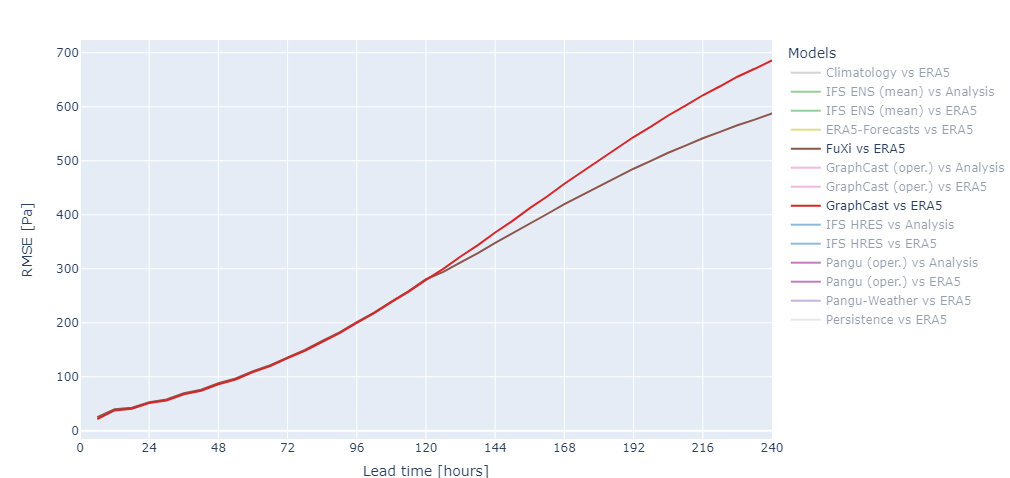

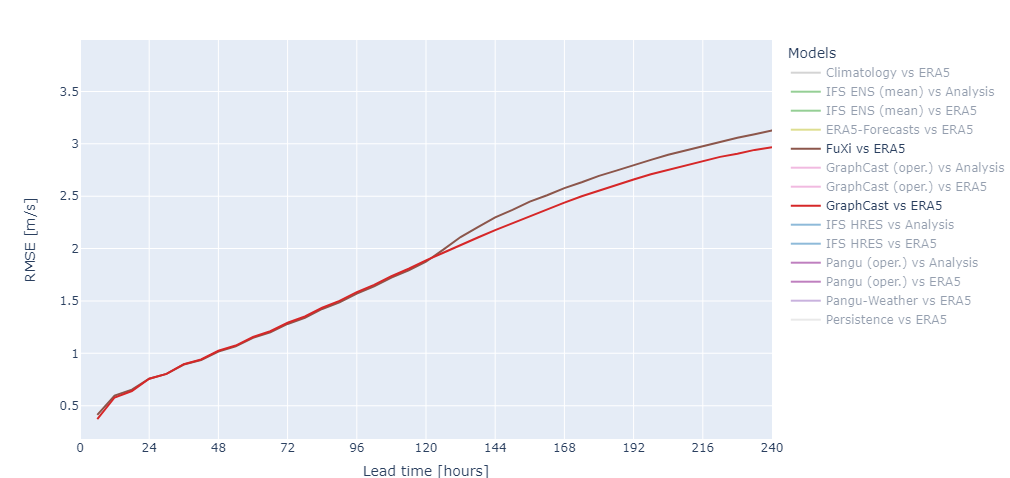

Combined with a few architectural changes, the training approach does indeed seem to bring improvements, but especially for more global weather patterns. For example, in the analyses brought forward by WeatherBench (2024), in comparison to GraphCast, it’s visible that FuXi has lower RMSE at longer lead times for many variables, such as sea-level pressure and 2-meter temperature. However, these improvements do not always come for free: for 2-meter temperature, the results indicate that FuXi Short performs worse than GraphCast. Interestingly, the power spectra suggest that FuXi has more power than GraphCast for this time range and variable. In other words, even though the forecasts are sharper (i.e. have more detail), they are also less accurate. Similarly, for 10-meter wind speed, FuXi Long seems to perform worse than GraphCast.

A cherry-picked set of FuXi vs GraphCast comparisons from WeatherBench, using the same initial conditions. Displayed are global scores for sea-level pressure (top), 2-meter temperature (middle) and 10-meter wind speed (bottom) analyzed at highest possible resolution for 2020.
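
For readers who want to know what goes into such a global RMSE score: on a regular latitude-longitude grid, grid cells near the poles cover much less area than cells near the equator, which is why global scores are typically weighted by the cosine of the latitude. The sketch below shows this idea in plain Python. The function names are hypothetical and the code is an illustration of the principle, not WeatherBench’s own implementation.

```python
import math


def latitude_weights(latitudes_deg):
    """cos(latitude) weights, normalized so that they average to 1."""
    raw = [math.cos(math.radians(lat)) for lat in latitudes_deg]
    mean = sum(raw) / len(raw)
    return [w / mean for w in raw]


def weighted_rmse(forecast, truth, latitudes_deg):
    """RMSE over a lat x lon grid given as a list of rows, one row per latitude."""
    weights = latitude_weights(latitudes_deg)
    total, count = 0.0, 0
    for w, f_row, t_row in zip(weights, forecast, truth):
        for f, t in zip(f_row, t_row):
            total += w * (f - t) ** 2  # squared error, scaled by the row's weight
            count += 1
    return math.sqrt(total / count)
```

With this weighting, an error at the equator counts more heavily than the same error at 60 degrees latitude, in line with the larger area it represents.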

ECMWF AIFS: adding attention to graph neural networks

Recognizing the developments in MLWP technology, specifically Pangu-Weather, FourCastNet and GraphCast as first-generation and FengWu and FuXi as second-generation AI weather models, ECMWF chose to start developing an in-house model as well. It is part of ECMWF’s “new ML project, which began in summer 2023 and aims to expand [its] applications of machine learning to Earth system modelling” (ECMWF, 2023).

In October 2023, ECMWF launched the alpha version of its Artificial Intelligence/Integrated Forecasting System or AIFS for short. It used graph neural networks as it was inspired by GraphCast and produced forecasts at approximately 1 degree resolution for wind, temperature, humidity, and geopotential at 13 pressure levels as well as for various surface-level variables. Analyses showed that this alpha version, like the other AI-based weather models, could already be competitive for some variables especially in the long term.

These developments were followed by an update to AIFS released in January 2024. The architecture was altered to use attention-based graph neural networks, the grid projection was changed and sliding attention was introduced. What’s more, the model changed from 1-degree to 0.25-degree resolution.
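
To give an intuition for what ‘attention-based’ means in a graph neural network: instead of averaging the information from all neighboring nodes equally, each node computes how relevant every neighbor is and weighs their contributions accordingly. The sketch below is a bare-bones illustration in plain Python. It is not the AIFS architecture: real models use learned projections, multiple attention heads and far larger graphs, and the function names here are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_aggregate(features, neighbors):
    """One message-passing step in which each node attends over its neighbors."""
    updated = {}
    for node, nbrs in neighbors.items():
        query = features[node]
        scale = math.sqrt(len(query))
        # Similarity between the node and each neighbor (scaled dot product).
        scores = [dot(query, features[n]) / scale for n in nbrs]
        weights = softmax(scores)  # attention weights sum to 1
        # New node feature: attention-weighted average of the neighbor features.
        updated[node] = [
            sum(w * features[n][k] for w, n in zip(weights, nbrs))
            for k in range(len(query))
        ]
    return updated
```

Here, `features` maps each node to a feature vector and `neighbors` maps each node to the list of nodes it is connected to. Neighbors that resemble the node receive a higher weight than neighbors that do not.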

Today, AIFS scores are not available in WeatherBench. However, ECMWF’s own analysis suggests that especially the updated model has competitive performance compared to other AI-based models like GraphCast and Pangu-Weather, but also to the NWP-based IFS.

RMSE for northern hemisphere 2-meter temperature between various AI-based weather models, including the AIFS alpha version (light blue) and updated version (blue). In this case, AIFS shows competitive performance in comparison to other AI-based weather models and the IFS. Chart from ECMWF (2024).

AI developments: a meteorologist’s response

As described above, developments in AI weather modeling are progressing rapidly. New models are emerging continuously, and current AI models are being improved at a fast pace. These developments seem to be advancing much quicker than the development of current NWP models. This sometimes makes it challenging for us as meteorologists to stay updated with the latest advancements. To share as much knowledge on this subject as possible, we at Infoplaza undertake several initiatives.

Firstly, annual events called Meteo Fora are organized. During these events, employees from the company speak about their expertise and share their knowledge with the rest of the company. Additionally, quarterly reviews are held where new developments or new products are announced. Developments in AI weather modeling are frequently discussed during these reviews. Furthermore, communication lines between different branches within Infoplaza are short. This often leads to interesting conversations at the coffee machine between meteorologists and modelers about the rise of AI in weather modeling.

The reactions of our meteorologists to the developments in AI weather forecasting are mainly hopeful. Meteorologists are always seeking the best data to create the most accurate weather forecasts. If AI can assist in this, we are eager to utilize it. However, it is essential that we learn to understand these models as thoroughly as we currently understand the existing NWP models. Just like NWP models, MLWP models are not perfect, and only a meteorologist who thoroughly understands a model can manually adjust its outputs before they appear in the weather forecast. For this reason, we as meteorologists closely follow the developments within our modelers' department.

What’s next: WeatherBench, using observations and predicting extreme weather events

In the forthcoming articles in this series on weather forecasting with AI, we’ll extend our focus from the current generations of AI models to what comes next. Firstly, we’ll dive deeper into the WeatherBench framework, which is the de facto standard for evaluating AI-based weather models. Then, we look at going beyond analyses by introducing methods for using observations more directly. Finally, we look at studies attempting to mitigate the problem that AI-based weather models struggle with extreme weather situations.

Evaluating AI-based weather models with WeatherBench

Introduces the WeatherBench benchmarking suite, which can be used to consistently evaluate AI-based weather models. What’s more, it allows for comparison between models.

Going beyond analyses - using observations more directly

Today’s AI-based weather models are reliant on NWP analyses – but new approaches are trying to work around this limitation.

Better capturing extremes with sharper forecasts

One of the downsides of AI-based weather models is increased blurring further ahead in time as well as difficulty capturing extreme weather events. New approaches have emerged that attempt to mitigate this problem.

References

Chen, K., Han, T., Gong, J., Bai, L., Ling, F., Luo, J. J., ... & Ouyang, W. (2023). Fengwu: Pushing the skillful global medium-range weather forecast beyond 10 days lead. arXiv preprint arXiv:2304.02948.

Chen, L., Zhong, X., Zhang, F., Cheng, Y., Xu, Y., Qi, Y., & Li, H. (2023b). FuXi: a cascade machine learning forecasting system for 15-day global weather forecast. npj Climate and Atmospheric Science, 6(1), 190.

ECMWF. (2023). ECMWF unveils Alpha version of new ML model. https://www.ecmwf.int/en/about/media-centre/aifs-blog/2023/ECMWF-unveils-alpha-version-of-new-ML-model

ECMWF. (2024). First update to the AIFS. https://www.ecmwf.int/en/about/media-centre/aifs-blog/2024/first-update-aifs

Han, T., Guo, S., Ling, F., Chen, K., Gong, J., Luo, J., ... & Bai, L. (2024). FengWu-GHR: Learning the Kilometer-scale Medium-range Global Weather Forecasting. arXiv preprint arXiv:2402.00059.

WeatherBench. (2024). Deterministic scores – WeatherBench2. https://sites.research.google/weatherbench/deterministic-scores/

Xiao, Y., Bai, L., Xue, W., Chen, K., Han, T., & Ouyang, W. (2023). FengWu-4DVar: Coupling the Data-driven Weather Forecasting Model with 4D Variational Assimilation. arXiv preprint arXiv:2312.12455.
